Welcome to The Advanced Matrix Factorization Jungle

[ A living document on the state of the art matrix factorization algorithms and their numerous implementations ]

Table of Content

- Introduction

- Why this page ?

- Contributions

- Heuristics and Phase transitions

- Notations

- Randomized Algorithms ( Randomized Numerical Linear Algebra (RandNLA))

- Kernel Factorizations

- Spectral Clustering, A = DX with unknown D and X, solve for sparse X and X_i = 0 or 1

- K-Means / K-Median: A = DX with unknown D and X, solve for XX^T = I and X_i = 0 or 1

- Subspace Clustering, A = AX with unknown X, solve for sparse/other conditions on X

- Graph Matching: A = XBX^T with unknown X, B solve for B and X as a permutation

- NMF: A = DX with unknown D and X, solve for elements of D,X positive

-

Generalized Matrix Factorization,

W.*L − W.*UV′ with W a known mask, U,V unknowns solve for U,V and L lowest rank possible

- Matrix Completion, A = H.*L with H a known mask, L unknown solve for L lowest rank possible

- Stable Principle Component Pursuit (SPCP)/ Noisy Robust PCA, A = L + S + N with L, S, N unknown, solve for L low rank, S sparse, N noise

- Robust PCA : A = L + S with L, S unknown, solve for L low rank, S sparse

- Sparse PCA: A = DX with unknown D and X, solve for sparse D

- Dictionary Learning: A = DX with unknown D and X, solve for sparse X

- Archetypal Analysis: A = DX with unknown D and X, solve for D = AB with D, B positive

- Matrix Compressive Sensing (MCS), find a rank-r matrix L such that A(L) ~= b / or A(L+S) = b

- Multiple Measurement Vector (MMV) Y = A X with unknown X and rows of X are sparse.

- Compressive Sensing, Y = A X with unknown X and rows of X are sparse, X is one column.

- Blind Source Separation (BSS) Y = A X with unknown A and X and statistical independence between columns of X or subspaces of columns of X

- Partial and Online SVD/PCA

- Other factorizations

- D(T(.)) = L + E with unknown L, E and unknown transformation T and solve for transformation T, Low Rank L and Noise E

- Boolean Matrix Factorization

- Tensor Decomposition

- Frameworks featuring advanced Matrix factorizations

Introduction

Why this page ?

Contributions

The sources for this list include the following most excellent sites:

who provide more in-depth additional information. Additional implementations are featured on Nuit Blanche. The following people provided additional inputs to this page:

Your name can be listed here too if you provide relevant context/links to this document.

Heuristics and Phase transitions

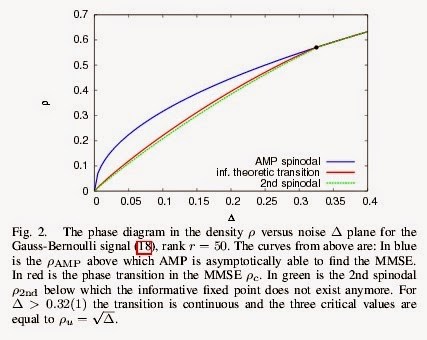

For each factorization identified in this document, I have added an item called Phase Transition that features papers, websites that have explored the set of parameters for which the heuristics of the implementation of these factorizations work or not. It turns out that in many cases these phase transitions are sharp. In compressive sensing, we call it the Donoho-Tanner phase transition for L_1 type of solvers. If you have found or written about a phase transition for any of these factorizations, please kindly let me know about it.

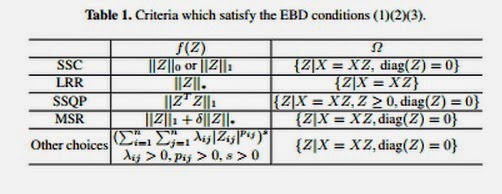

In particular, most of the algorithms listed below rely on using the heuristics of the nuclear norm as a proxy to the rank functional. It may not be optimal. Currently, CVX ( Michael Grant and Stephen Boyd) consistently allows one to explore other proxies than the rank functional such as the log-det as found by Maryam Fazell, Haitham Hindi, Stephen Boyd and others. One could argue that the phase transitions of these heuristics are probably the only way to really compare one implementation from another one and bring clarity to this jungle. Beyond clarity, phase transitions provide a unique navigational tool ( see The Map Makers) to the users.

Notations

In terms of notations, A, L, S and N refers to a general, a low rank, a sparse and a noisy matrix respectively. This page lists the different codes that implement some of these matrix factorizations: Matrix Completion, Robust PCA , Noisy Robust PCA, Sparse PCA, NMF, Dictionary Learning, MMV, Randomized Algorithms and other factorizations. Some of these toolboxes can sometimes implement several of these decompositions and are listed accordingly in each decomposition/factorization. Before appearing on this page, I generally feature these algorithms on Nuit Blanche under the MF tag: http://nuit-blanche.blogspot.com/search/label/MF .  You can also subscribe to the Nuit Blanche feed, You can also subscribe to the Nuit Blanche feed,

Randomized Algorithms ( Randomized Numerical Linear Algebra (RandNLA))

Phase Transitions:

Implementations:

Kernel Factorizations, Positive Kernel K(x,x') = G(f(x)*f'(x'))

(Reproducing Hilbert Kernel Spaces, Variable separation, Multipole methods,...)

Phase Transitions:

Implementations:

Spectral Clustering: A = DX with unknown D and X, solve for sparse X and X_i = 0 or 1

Phase Transitions:

Implementations:

- Numerous (specific operators representing the graph)

K-Means / K-Median: A = DX with unknown D and X, solve for XX^T = I and X_i = 0 or 1

Phase Transitions:

Subspace Clustering: A = AX with unknown X, solve for sparse/other conditions on X

Phase Transitions:

Implementations:

- LSR : Robust and Efficient Subspace Segmentation via Least Squares Regression by Canyi Lu, Hai Min, Zhong-Qiu Zhao, Lin Zhu, De-Shuang Huang, and Shuicheng Yan

- LRRSC : Subspace Clustering by Exploiting a Low-Rank Representation with a Symmetric Constraint by Jie Chen, Zhang Yi

- SSC : a_i = A x_i with x_i sparse and x_ii = 0, X is unknown, A is known: Sparse Subspace Clustering: Algorithm, Theory, and Applications by Ehsan Elhamifar, Rene Vidal. Sparse Subspace Clustering (SSC) is an algorithm based on sparse representation theory for segmentation of data lying in a union of subspaces.

- SMCE : A_ix_i = 0, 1^Tx_i = 1, Q_ix_i is sparse, X is unknown, A and Q(A) are known; Sparse Manifold Clustering and Embedding by Ehsan Elhamifar, Rene Vidal, Sparse Manifold Clustering and Embedding (SMCE) is an algorithm based on sparse representation theory for clustering and dimensionality reduction of data lying in a union of nonlinear manifolds.

- Local Linear Embedding (LLE)

Graph Matching: A = XBX^T with unknown X, B solve for X as a permutation

Phase Transitions:

Implementations:

Non-Negative Matrix Factorization / NMF: A = DX with unknown D and X,

solve for elements of D,X > 0

Phase Transitions:

Implementations:

- NonNegative matrix decomposition by Yangyang Xu and Wotao Yin (using the block-coordinate update method)

- NonNegative Matrix decomposition from partial observations by Yangyang Xu and Wotao Yin (using the block-coordinate update method)

- Near-Separable NMF: see Robust Near-Separable Nonnegative Matrix Factorization Using Linear Optimization by Nicolas Gillis, Robert Luce. CPLEX code (along with the code of other near-separable NMF algorithms, including a CPLEX implementation of Hottopixx) is available here.

- Hott Topixx: Factoring nonnegative matrices with linear programs by Victor Bittorf, Benjamin Recht, Christopher Ré, Joel Tropp (a CVX implementation by Nicolas Gillis can be found here, see Robustness Analysis of HottTopixx, a Linear Programming Model for Factoring Nonnegative Matrices))

- Super Beta-NTF 2000 by Antoine Liutkus, the beta_ntf module supports weighted parafac factorization of nonnegative numpy ndarray of arbitrary shape minimizing any β-divergence (Python)

- Complex Matrix Factorization Toolbox by Bryan King

- ANLS-NMF by Jingu Kim and Haesun Park

- Pre-NMF, sNMF: Sparse and Unique Nonnegative Matrix Factorization Through Data Preprocessing by Nicolas Gillis.

- Sparse NMF/PreProc-NMF: Sparse and Unique Nonnegative Matrix Factorization Through Data Preprocessing by Nicolas Gillis

- Practical NMF/NTF with beta divergence by Antoine Liutkus

- Itakura-Saito NMF: Majorization-Minimization Algorithm for Smooth Itakura-Saito NonNegative Matrix Factorization by Cedric Fevotte

- HALS: Accelerated Multiplicative Updates and Hierarchical ALS Algorithms for Nonnegative Matrix Factorization by Nicolas Gillis, François Glineur.

- SPAMS (SPArse Modeling Software) by Julien Mairal, Francis Bach, Jean Ponce,Guillermo Sapiro

- NMF: C.-J. Lin. Projected gradient methods for non-negative matrix factorization. Neural Computation, 19(2007), 2756-2779.Non-Negative Matrix Factorization: This page contains an optimized C implementation of the Non-Negative Matrix Factorization (NMF) algorithm, described in [Lee & Seung 2001]. We implement the update rules that minimize a weighted SSD error metric. A detailed description of weighted NMF can be found in[Peers et al. 2006].

- NTFLAB for Signal Processing, Toolboxes for NMF (Non-negative Matrix Factorization) and NTF (Non-negative Tensor Factorization) for BSS (Blind Source Separation)

- Non-negative Sparse Modeling of Textures (NMF) [Matlab implementation of NMF (Non-negative Matrix Factorization) and NTF (Non-negative Tensor), a faster implementation of NMF can be found here, here is a more recent Non-Negative Tensor Factorizations package]

Non-Negative Tensor Factorizations

Phase Transitions:

Implementations:

Generalized Matrix Factorization

W.*L − W.*UV′ with W a known binary mask, U,V unknowns solve for U,V and L lowest rank possible

Phase Transitions:

Implementations:

Matrix Completion, A = H.*L with H a known mask, L unknown solve for L lowest rank possible

Phase Transitions:

Implementations:

The idea of this approach is to complete the unknown coefficients of a matrix based on the fact that the matrix is known to be low rank in advance:

- HASI: Probabilistic Low-Rank Matrix Completion with Adaptive Spectral Regularization Algorithms by Adrien Todeschini, François Caron, Marie Chavent

- BiG-AMP: Bilinear Generalized Approximate Message Passing by Jason T. Parker, Philip Schniter, Volkan Cevher. The project page and code is here.

- Sparse Bayesian Methods for Low-Rank Matrix Estimation by S. Derin Babacan, Martin Luessi, Rafael Molina, Aggelos K. Katsaggelos. The code is here.

- Incremented Rank PowerFactorization (IRPF) by Justin Haldar and Diego Hernando

- ProPPA: ProPPA: A Fast Algorithm for $\ell_1$ Minimization and Low-Rank Matrix Completion by Ranch Y.Q. Lai, Pong C. Yuen

- Matrix ALPS by Anastasios Kyrillidis and Volkan Cevher

- GM: Efficient Matrix Completion with Gaussian Models by Flavien Léger, Guoshen Yu, and Guillermo Sapiro.

- LRGeomCG by Bart Vandereycken as featured in Low-rank matrix completion by Riemannian optimization

- TFOCS by Stephen Becker, Emmanuel J. Candès and Michael Grant. ( Matrix completion demo )

- GRASTA ( Grassmannian Robust Adaptive Subspace Tracking Algorithm ) by Laura Balzano and Jun He

- IRLS-M: Low-rank matrix recovery via iteratively reweighted least squares minimization by Massimo Fornasier and Holger Rauhut, Rachel Ward. An implementation of this algorithm is here.

- SubMF: Divide-and-Conquer Matrix Factorization by Lester Mackey, Ameet Talwalkar, Michael I. Jordan.

- RTRMC: RTRMC : A Riemannian trust-region method for low-rank matrix completion by Nicolas Boumal and Pierre-Antoine Absil

- Convex Optimization without Projection Steps by Martin Jaggi: Code example can be found here (in Java, see implementation paragraph for details).

- OptSpace: Matrix Completion from a Few Entries by Raghunandan H. Keshavan, Andrea Montanari, and Sewoong Oh

- LMaFit: Low-Rank Matrix Fitting by Zaiwen Wen, Wotao Yin, and Yin Zhang

- Penalty Decomposition Methods for Rank Minimization by Zhaosong Lu and Yong Zhang.The attendant MATLAB code is here.

- Jellyfish: Parallel Stochastic Gradient Algorithms for Large-Scale Matrix Completion, Benjamin Recht, Christopher Re, Apr 2011

- GROUSE: Online Identification and Tracking of Subspaces from Highly Incomplete Information, Laura Balzano, Robert Nowak, Benjamin Recht, 2010

- Matrix Completion via Thresholding by Angshul Majumdar

- SVP: Guaranteed Rank Minimization via Singular Value Projection, Raghu Meka, Prateek Jain, Inderjit Dhillon, 2009

- SET: SET: an algorithm for consistent matrix completion, W. Dai, O. Milenkovic, 2009

- NNLS: An accelerated proximal gradient algorithm for nuclear norm regularized least squares problems, Kim-Chuan Toh and Sangwoon Yun, 2009

- FPCA: Fixed point and Bregman iterative methods for matrix rank minimization, Shiqian Ma, Donald Goldfarb and Lifeng Chen, 2009

- SVT: A singular value thresholding algorithm for matrix completion by Jian-Feng Cai, Emmanuel Candes and Zuowei Shen, 2008

Stable Principle Component Pursuit (SPCP)/ Noisy Robust PCA, A = L + S + N with L, S, N unknown, solve for L low rank, S sparse, N noise

Phase Transitions:

Implementations:

Robust PCA : A = L + S with L, S unknown, solve for L low rank, S sparse

Phase Transitions:

Implementations:

- TGA (Trimmed Grassman Averages): Grassmann Averages for Scalable Robust PCA by Søren Hauberg, Aasa Feragen,Michael J. Black

- BiG-AMP: Bilinear Generalized Approximate Message Passing by Jason T. Parker, Philip Schniter, Volkan Cevher. The project page and code is here.

- Sparse Bayesian Methods for Low-Rank Matrix Estimation by S. Derin Babacan, Martin Luessi, Rafael Molina, Aggelos K. Katsaggelos. The code is here.

- Matrix ALPS by Anastasios Kyrillidis and Volkan Cevher

- TFOCS by Stephen Becker, Emmanuel J. Candès and Michael Grant. ( RPCA demo )

- SpaRCS: SpaRCS: Recovering Low-rank and Sparse matrices from Compressive Measurements by Andrew E. Waters, Aswin C. Sankaranarayanan, and Richard G. Baraniuk

- GRASTA ( Grassmannian Robust Adaptive Subspace Tracking Algorithm ) by Laura Balzano and Jun He

- DFC: Divide-and-Conquer Matrix Factorization by Lester Mackey, Ameet Talwalkar, Michael I. Jordan.

- DECOLOR: Moving Object Detection by Detecting Contiguous Outliers in the Low-Rank Representation by Xiaowei Zhou, Can Yang, Weichuan Yu.

- Convex Optimization without Projection Steps by Martin Jaggi: Code example can be found here (in Java, see implementation paragraph for details)

- LRR: Exact Subspace Segmentation and Outlier Detection by Low-Rank Representation by Guangcan Liu, Huan Xu, Shuicheng Yan. LRR example can be found here.

- Robust PCA : Two Codes that go with the paper "Two Proposals for Robust PCA Using Semidefinite Programming." by MichaleI Mccoy and Joel Tropp

- SPAMS (SPArse Modeling Software) by Julien Mairal, Francis Bach, Jean Ponce,Guillermo Sapiro.

- ADMM: Alternating Direction Method of Multipliers ‘‘Fast Automatic Background Extraction via Robust PCA’ by Ivan Papusha. The poster is here. The matlab implementation is here.

- LRSD: Sparse and Low-Rank Matrix Decomposition Via Alternating Direction Methods by Xiaoming Yuan, Junfeng Yang

- PCP: Generalized Principal Component Pursuit by Angshul Majumdar

- Augmented Lagrange Multiplier (ALM) Method [exact ALM - MATLAB zip] [inexact ALM - MATLAB zip], - The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices, Zhouchen Lin, Minming Chen, Yi Ma

- Accelerated Proximal Gradient , Reference - Fast Convex Optimization Algorithms for Exact Recovery of a Corrupted Low-Rank Matrix, Zhouchen Lin Arvind Ganesh,John Wright, Leqin Wu, Minning Chen, and Yi Ma [full SVD version - MATLAB zip] [partial SVD version - MATLAB zip]

- Dual Method [MATLAB zip], Reference - Fast Convex Optimization Algorithms for Exact Recovery of a Corrupted Low-Rank Matrix, Zhouchen Lin, Arvind Ganesh, John Wright, Leqin Wu, Minning Chen, and Yi Ma

- SVT: A singular value thresholding algorithm for matrix completion by Jian-Feng Cai, Emmanuel Candes and Zuowei Shen, 2008

- Alternating Direction Method [MATLAB zip] , Reference - Sparse and Low-Rank Matrix Decomposition via Alternating Direction Methods, Xiaoming Yuan, and Junfeng Yang (2009).

- LMaFit: Low-Rank Matrix Fitting by Zaiwen Wen, Wotao Yin, and Yin Zhang

- Bayesian robust PCA by Xinghao Ding, Lihan He, and Lawrence Carin

- Compressive-Projection PCA (CPPCA) Compressive-Projection Principal Component Analysis and the First Eigenvector, by James E. Fowler

Sparse PCA: A = DX with unknown D and X, solve for sparse D

- FSPCA: Sparse and Functional Principal Components Analysis by Genevera Allen. The code for the FSPCA is available here

- 24AM: 24 efficient parallel SPCA implementations by Peter Richtárik, Martin Takáč, Selin Damla Ahipaşaoğlu.(Alternating Maximization: Unifying Framework for 8 Sparse PCA Formulations and Efficient Parallel Codes)

- Sparse PCA using ALM method can be found here : From Sparse PCA via Augmented Lagrangian Methods and Application to Informaitve Feature Selection by Nikhil Naikal, Allen Y. Yang, S. Shankar Sastry

- Sparse Non-Negative Generalized PCA: From A Generalized Least Squares Matrix Decomposition by Genevera Allen, Logan Grosenick, Jonathan Taylor.

- Structured Sparse Principal Component Analysis by Rodolphe Jenatton, Guillaume Obozinski, Francis Bach.[code]

- SPAMs by Julien Mairal, Francis Bach, Jean Ponce,Guillermo Sapiro

- DSPCA: Sparse PCA using SDP byRonny Luss, Alexandre d'Aspremont, Laurent El Ghaoui Code is here.

- PathPCA: A fast greedy algorithm for Sparse PCA by Alexandre d'Aspremont, Francis Bach, Laurent El Ghaoui..The code is here.

Dictionary Learning: A = DX with unknown D and X, solve for sparse X

Phase Transitions:

Implementations:

Some implementations of dictionary learning implement the NMF also

- BiG-AMP: Bilinear Generalized Approximate Message Passing by Jason T. Parker, Philip Schniter, Volkan Cevher. The project page and code is here.

- CoefROMP: Improving Dictionary Learning: Multiple Dictionary Updates and Coefficient Reuse by Leslie N. Smith and Michael Elad.

- Learning Sparse Representations of High Dimensional Data on Large Scale Dictionaries by Zhen James Xiang Hao Xu Peter J. Ramadge. An implementation as a MATLAB Toolbox is here.

- OSDL (Online Structured Dictionary Learning, direct links to the toolbox:(.rar, .tar, .zip) ) by Zoltan Szabo

- BPFA: Nonparametric Bayesian Dictionary Learning for Analysis of Noisy and Incomplete Images by Mingyuan Zhou, Haojun Chen, John Paisley, Lu Ren, Lingbo Li, Zhengming Xing, David Dunson, Guillermo Sapiro and Lawrence Carin.

- Online Learning for Matrix Factorization and Sparse Coding by Julien Mairal, Francis Bach, Jean Ponce,Guillermo Sapiro [The code is released as SPArse Modeling Software or SPAMS]

- Dictionary Learning Algorithms for Sparse Representation (Matlab implementation of FOCUSS/FOCUSS-CNDL is here)

- Multiscale sparse image representation with learned dictionaries [Matlab implementation of the K-SVD algorithm is here, a newer implementation by Ron Rubinstein is here ]

- Efficient sparse coding algorithms [ Matlab code is here ]

- Shift Invariant Sparse Coding of Image and Music Data. Matlab implemention is here

- Shift-invariant dictionary learning for sparse representations: extending K-SVD.

- Thresholded Smoothed-L0 (SL0) Dictionary Learning for Sparse Representations by Hadi Zayyani, Massoud Babaie-Zadeh and Remi Gribonval.

- Non-negative Sparse Modeling of Textures (NMF) [Matlab implementation of NMF (Non-negative Matrix Factorization) and NTF (Non-negative Tensor), a faster implementation of NMF can be found here, here is a more recent Non-Negative Tensor Factorizations package]

Archetypal Analysis: A = DX with unknown D and X, solve for D = AB with D, B positive

Phase Transitions:

Matrix Compressive Sensing (MCS), Find a rank-r matrix L such that A(L) ~= b / or A(L+S) = b

Phase Transitions:

Implementations:

Multiple Measurement Vector (MMV) Y = A X with unknown X and rows of X are sparse.

Compressive Sensing/SMV, Y = A X with unknown X and rows of X are sparse, X is one column.

Compressive sensing is a special case of MMV called Single Measurement Vector (SMV)

Phase Transitions:

Blind Source Separation (BSS) Y = A X with unknown A and X and statistical independence between columns of X or subspaces of columns of X

Phase Transitions:

Implementations:

Include Independent Component Analysis (ICA), Independent Subspace Analysis (ISA), and Sparse Component Analysis (SCA). There are many available codes for ICA and some for SCA. Here is a non-exhaustive list of some famous ones (which are not limited to linear instantaneous mixtures). TBC

ICA:

SCA:

Partial and Online SVD/PCA

Phase Transitions:

Implementations:

Online SVD

Partial SVDs:

Other factorization

D(T(.)) = L + E with unknown L, E and unknown transformation T and solve for transformation T, Low Rank L and Noise E

Phase Transitions:

Implementations:

Boolean Matrix Factorization Tensor Decomposition

Phase Transitions:

Implementations:

Tensor Decomposition

Frameworks featuring advanced Matrix factorizations

For the time being, few have integrated the most recent factorizations.

Books

Sources

Relevant links

|

留下你的评论